Four radar modules are combined to ensure full 360° coverage. AI algorithms analyze the collected data to identify whether a person within range has suffered a fall. The radar system can track more than 30 people in spaces of up to 1600 square feet with an angular resolution of 12°. For added object detection capabilities, passive transponders were developed to work with the radar system.
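To put these figures in perspective, the short Python sketch below works through the arithmetic. It assumes that the four modules split the full circle evenly without overlap, and it uses the ten-meter range reported for the demonstrator's trials; neither the function names nor the even split are taken from the project itself.

```python
import math

# Exact conversion factor: 1 square foot = 0.09290304 square meters.
SQ_FT_TO_SQ_M = 0.09290304


def sector_per_module_deg(modules: int, total_deg: float = 360.0) -> float:
    """Angle each module covers, ASSUMING an even split with no overlap."""
    return total_deg / modules


def cross_range_separation_m(range_m: float, angular_resolution_deg: float) -> float:
    """Arc length spanned by one angular resolution cell at a given range.

    Two targets at the same distance that sit closer together than this
    cannot be told apart by angle alone (range or motion may still differ).
    """
    return range_m * math.radians(angular_resolution_deg)


if __name__ == "__main__":
    print(sector_per_module_deg(4))                        # 90.0
    print(round(1600 * SQ_FT_TO_SQ_M, 1))                  # 148.6
    print(round(cross_range_separation_m(10.0, 12.0), 2))  # 2.09
```

Under these assumptions, each module would cover a 90° sector, the monitored area corresponds to roughly 149 square meters, and a 12° cell is about two meters wide at a distance of ten meters.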

Supported by the Ministry of Education and Research, the OMNICONNECT project has brought together researchers from the Fraunhofer Institute for Reliability and Microintegration IZM with partners from Berlin and Oldenburg. Their mission: to develop a miniature radar system that can detect medical emergencies or other situations that require a carer’s intervention, while respecting the privacy of the users. Unlike cameras or CCTV systems that record images, the system only tracks patterns of movement. It is integrated into a ceiling light, making it easy to install, unobtrusive, and easy to accept for the people living with it.

The system works with AI-supported radar modules and passive transponders that can be placed on different objects, thus for the first time enabling the tracking of movement and location with a single radar system. The transponders work as frequency-specific radar targets, with each resonating at a known frequency. This turns them into uniquely identifiable beacons that the system can scan for and whose precise location can be calculated by checking the time needed for the signal to bounce back.
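The two ideas in this paragraph, identifying a transponder by its resonant frequency and locating it by the signal's round-trip time, can be illustrated with a minimal Python sketch. It is not the project's implementation: the `Transponder` class, the labels, the frequencies, and the tolerance are hypothetical values chosen for illustration, and practical radar systems usually derive the delay indirectly rather than timing a single pulse.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass(frozen=True)
class Transponder:
    """Hypothetical record for one passive transponder."""
    label: str
    resonant_hz: float  # illustrative value, not from the project


def range_from_round_trip(round_trip_s: float) -> float:
    """One-way distance: the signal travels out and back, hence the factor 1/2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def identify(echo_hz: float,
             known: Sequence[Transponder],
             tolerance_hz: float) -> Optional[Transponder]:
    """Return the known transponder closest in frequency, if within tolerance."""
    best = min(known, key=lambda t: abs(t.resonant_hz - echo_hz), default=None)
    if best is None or abs(best.resonant_hz - echo_hz) > tolerance_hz:
        return None
    return best


if __name__ == "__main__":
    # Hypothetical tags and frequencies, for illustration only.
    tags = [Transponder("walking frame", 60.5e9), Transponder("door", 61.5e9)]
    match = identify(60.52e9, tags, tolerance_hz=0.1e9)
    distance = range_from_round_trip(40e-9)  # a 40 ns round trip
    print(match.label if match else "unknown", round(distance, 2))
```

The same relation shows how demanding the timing is: a round trip over ten meters takes about 67 nanoseconds, and a position difference of five centimeters changes the round-trip time by only about a third of a nanosecond.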

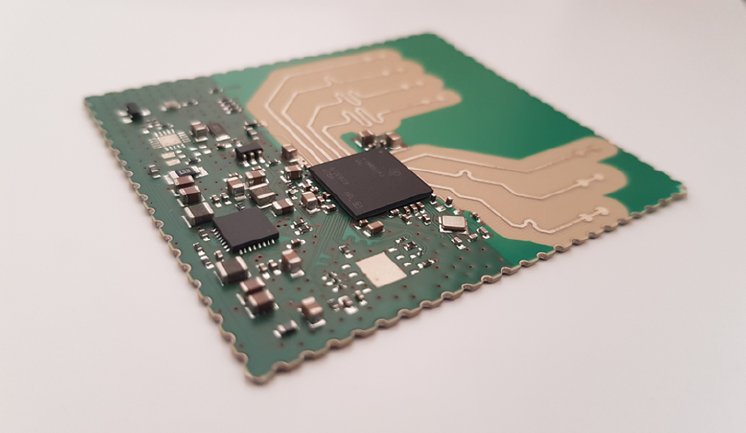

The data is processed on site by a field-programmable gate array (FPGA), a tiny computing system with an integrated processor. It shares the object and motion data with an AI-based system that can recognize movements and patterns, developed by the computer science researchers at the OFFIS Institute of the University of Oldenburg. To control the app and identify specific options, a special app interface was built by HFC Human-Factors-Consult GmbH.

The demonstration unit designed and constructed at Fraunhofer IZM has undergone live trials and proven its ability to detect an object’s position with an accuracy of five centimeters within a range of ten meters. The demonstrator is currently being used by the project partners in several possible use cases. The data it collects can be used to derive movement patterns for typical behaviors and, once enough meaningful data is available, feed into possible assisted living applications or be used to recognize certain incidents. The position data could be used to check whether the detected person is feeling well or whether a medical or care intervention is indicated.

The OMNICONNECT project ran from 1 November 2019 to 31 December 2022, supported by funding from the German Ministry of Education and Research (funding ID 16SV8310).